The lawsuit that could rewrite the rules of AI copyright

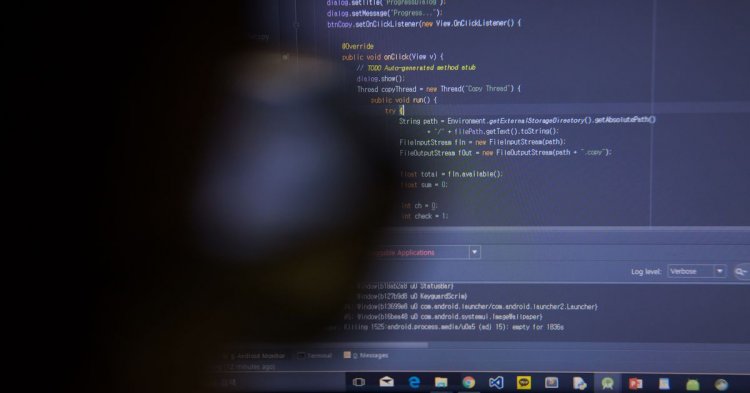

Microsoft, its subsidiary GitHub, and its business partner OpenAI have been targeted in a proposed class action lawsuit alleging that the companies’ creation of AI-powered coding assistant GitHub Copilot relies on “software piracy on an unprecedented scale.” The case is only in its earliest stages but could have a huge effect on the broader world of AI, where companies are making fortunes training software on copyright-protected data.

Copilot, which was unveiled by Microsoft-owned GitHub in June 2021, is trained on public repositories of code scraped from the web, many of which are published with licenses that require anyone reusing the code to credit its creators. Copilot has been found to regurgitate long sections of licensed code without providing credit — prompting this lawsuit that accuses the companies of violating copyright law on a massive scale.

“We are challenging the legality of GitHub Copilot,” said programmer and lawyer Matthew Butterick, who filed the lawsuit with the help of the San Francisco-based Joseph Saveri Law Firm, in a press statement. “This is the first step in what will be a long journey. As far as we know, this is the first class-action case in the US challenging the training and output of AI systems. It will not be the last. AI systems are not exempt from the law. Those who create and operate these systems must remain accountable.”

@github copilot, with "public code" blocked, emits large chunks of my copyrighted code, with no attribution, no LGPL license. For example, the simple prompt "sparse matrix transpose, cs_" produces my cs_transpose in CSparse. My code on left, github on right. Not OK. pic.twitter.com/sqpOThi8nf

— Tim Davis (@DocSparse) October 16, 2022

The lawsuit, which was filed last Friday, is in its early stages. In particular, the court has not yet certified the proposed class of programmers who have allegedly been harmed. But speaking to The Verge, Butterick and lawyers Travis Manfredi and Cadio Zirpoli of the Joseph Saveri Law Firm said they expected the case to have a huge impact on the wider world of generative AI.

Microsoft and OpenAI are far from alone in scraping copyrighted material from the web to train AI systems for profit. Many text-to-image AI systems, like the open-source program Stable Diffusion, were created in exactly the same way. The firms behind these programs insist that their use of this data is covered in the US by the fair use doctrine. But legal experts say this is far from settled law and that litigation like Butterick’s class action lawsuit could upend the tenuously defined status quo.

To find out more about the motivations and reasoning behind the lawsuit, we spoke to Butterick (MB), Manfredi (TM), and Zirpoli (CZ), who explained why they think we’re in the Napster era of AI and why letting Microsoft use others’ code without attribution could kill the open source movement.

In response to a request for comment, GitHub said: “We’ve been committed to innovating responsibly with Copilot from the start, and will continue to evolve the product to best serve developers across the globe.” OpenAI and Microsoft had not replied to similar requests at the time of publication.

This interview has been edited for clarity and brevity.

First, I want to talk about the reaction from the AI community a little bit, from people who are advocates for this technology. I found one comment that I think is representative of one reaction to this case, which says, “Butterick’s goal here is to kill transformative ML use of data such as source code or images, forever.”

What do you think about that, Matthew? Is that your goal? If not, what is?

Matthew Butterick: I think it’s really simple. AI systems are not magical black boxes that are exempt from the law, and the only way we’re going to have a responsible AI is if it’s fair and ethical for everyone. So the owners of these systems need to remain accountable. This isn’t a principle we’re making out of whole cloth and just applying to AI. It’s the same principle we apply to all kinds of products, whether it’s food, pharmaceuticals, or transportation.

I feel sometimes that with the backlash you get from the AI community (and you’re dealing with wonderful researchers, wonderful thinkers), they’re not acclimated to working in this sphere of regulation and safety. It’s always a challenge in technology because regulation lags behind innovation. But in the interim, cases like this fill that gap. That’s part of what a class action lawsuit is about: testing these ideas and starting to create clarity.

Do you think if you’re successful with your lawsuit that it will have a destructive effect on innovation in this domain, on the creation of generative AI models?

MB: I hope it’s the opposite. I think in technology, we see over and over that products come out that skirt the edges of the law, but then someone comes by and finds a better way to do it. So, in the early 2000s, you had Napster, which everybody loved but was completely illegal. And today, we have things like Spotify and iTunes. And how did these systems arise? By companies making licensing deals and bringing in content legitimately. All the stakeholders came to the table and made it work, and the idea that a similar thing can’t happen for AI is, for me, a little catastrophic. We just saw an announcement recently of Shutterstock setting up a Contributors’ Fund for people whose images are used in training [generative AI], and maybe that will become a model for how other training is done. Me, I much prefer Spotify and iTunes, and I’m hoping that the next generation of these AI tools is better and fairer for everyone and makes everybody happier and more productive.

I take it from your answers that you wouldn’t accept a settlement from Microsoft and OpenAI?

MB: [Laughs] It’s only day one of the lawsuit...

One section of the lawsuit I thought was particularly interesting was on the very close but murkily defined business relationship between Microsoft and OpenAI. You point out that in 2016 OpenAI said it would run its large-scale experiments on Microsoft’s cloud, that Microsoft has exclusive licenses for certain OpenAI products, and that Microsoft has invested a billion dollars in OpenAI, making it both OpenAI’s largest investor and service provider. What is the significance of this relationship, and why did you feel you needed to highlight it?

Travis Manfredi: Well, I would say that Microsoft is trying to use OpenAI, which is perceived as beneficial, as a shield to avoid liability. They’re trying to filter the research through this nonprofit to make it fair use, even though it’s probably not. So we want to show that whatever OpenAI started out as, it’s not that anymore. It’s a for-profit business. Its job is to make money for its investors. It may be controlled by a nonprofit [OpenAI Inc.], but the board of that nonprofit are all business people. We don’t know what their intentions are. But it doesn’t seem to be following the original mission of OpenAI. So we wanted to show (and hopefully discovery will reveal more information about this) that this is a collective scheme between Microsoft, OpenAI, and GitHub that is not as beneficial or as altruistic as they might have us believe.

What do you fear will happen if Microsoft, GitHub, OpenAI, and other players in the industry building generative AI models are allowed to keep using other people's data in this way?

TM: Ultimately, it could be the end of open-source licenses altogether. Because if companies are not going to respect your licenses, what’s the point of even putting one on your code if it’s going to be snapped up and spit back out without any attribution? We think open-source code has been tremendously beneficial to humanity and to the technology world, and we don’t think that AI that doesn’t understand how to code and can only make probabilistic guesses is better than the innovation that human coders can deliver.

MB: Yeah, I really do think this is an existential threat to open source. And maybe it’s just my generation, but I’ve seen enough situations when there’s a nice, free community operating on the internet, and someone comes along and says, “Let’s socialize the costs and privatize the profits.”

If you divorce the code from the creators, what does it mean? Let me give you one example. I spoke to an engineer in Europe who said, “Attribution is a really big deal to me because that’s how I get all my clients. I make open source software; people use my packages, see my name on it, and contact me, and I sell them more engineering or support.” He said, “If you take my attribution off, my career is over, and I can’t support my family, I can’t live.” And it really brings home that this is not a benign issue for a lot of programmers.

But do you think there’s a case to be made that tools like Copilot are the future and that they are better for coders in general?

MB: I love AI, and it’s been a dream of mine since I was an eight-year-old playing with a computer that we can teach these machines to reason like we do, and so I think this is a really interesting and wonderful field. But I can only go back to the Napster example: that [these systems] are just the first step, and no matter how much people thought Napster was terrific, it was also completely illegal, and we’ve done a lot better by bringing everyone to the table and making it fair for everybody.

So, what is a remedy that you’d like to see implemented? Some people argue that there is no good solution, that the training datasets are too big, that the AI models are too complex, to really trace attribution and give credit. What do you think of that?

Cadio Zirpoli: We’d like to see them train their AI in a manner which respects the licenses and provides attribution. I’ve seen on chat boards out there that there might be ways for people who don’t want that to opt out or opt in, but to throw up your hands and say “it’s too hard, so just let Microsoft do whatever they want” is not a solution we’re willing to live with.

Do you think this lawsuit could set a precedent for other forms of generative AI? We see similar complaints in text-to-image AI, that companies, including OpenAI, are using copyright-protected images without proper permission, for example.

CZ: The simple answer is yes.

TM: The DMCA applies equally to all forms of copyrightable material, and images often include attribution; artists, when they post their work online, typically include a copyright notice or a Creative Commons license, and those are also being ignored by [companies creating] image generators.

So what happens next with this lawsuit? I believe you need to be granted class-action status on this lawsuit for it to go ahead. What timeframe do you think that could happen on?

CZ: Well, we expect Microsoft to bring a motion to dismiss our case. We believe we will be successful, and the case will proceed. We’ll engage in a period of discovery, and then we will move the court for class certification. The timing of that can vary widely with respect to different courts and different judges, so we’ll have to see. But we believe we have a meritorious case and that we will be successful not only overcoming the motion to dismiss but in getting our class certified.

(Except for the headline, this story has not been edited by Leader Desk Team and is published from a syndicated feed.)